
Healthc Inform Res, Volume 29(2), 2023


Abstract

Objectives

The optic disc is a structure in retinal fundus images that influences the extraction of glaucoma features. This study proposes a method that automatically segments the optic disc area in retinal fundus images using deep learning based on a convolutional neural network (CNN).

Methods

This study used private and public datasets containing retinal fundus images. The private dataset consisted of 350 images, while the public dataset was the Retinal Fundus Glaucoma Challenge (REFUGE) dataset. The proposed method used a CNN-based single-shot multibox detector (SSD) with MobileNetV2 to form region-of-interest (ROI) images, using the original images resized to 640 × 640 pixels as input. A pre-processing sequence was then applied, comprising augmentation, resizing, and normalization. Finally, a U-Net model was applied for optic disc segmentation with 128 × 128 input data.

Currently, computer vision is widely applied in various fields, including medicine, to diagnose and analyze diseases based on medical images. Disorders diagnosed using medical images include thyroid cancer [1] on ultrasound images, back pain [2] on computed tomography scans, breast cancer [3] on mammograms, dental and oral diseases [4] on radiographic images, abnormalities of spinal intervertebral discs [5,6] on magnetic resonance images, and retinal conditions, such as those caused by diabetes mellitus [7,8] and glaucoma [9,10], on fundus images. Glaucoma is an eye disease that is reported to be the second leading cause of blindness worldwide. Because it is incurable, it needs to be detected early.

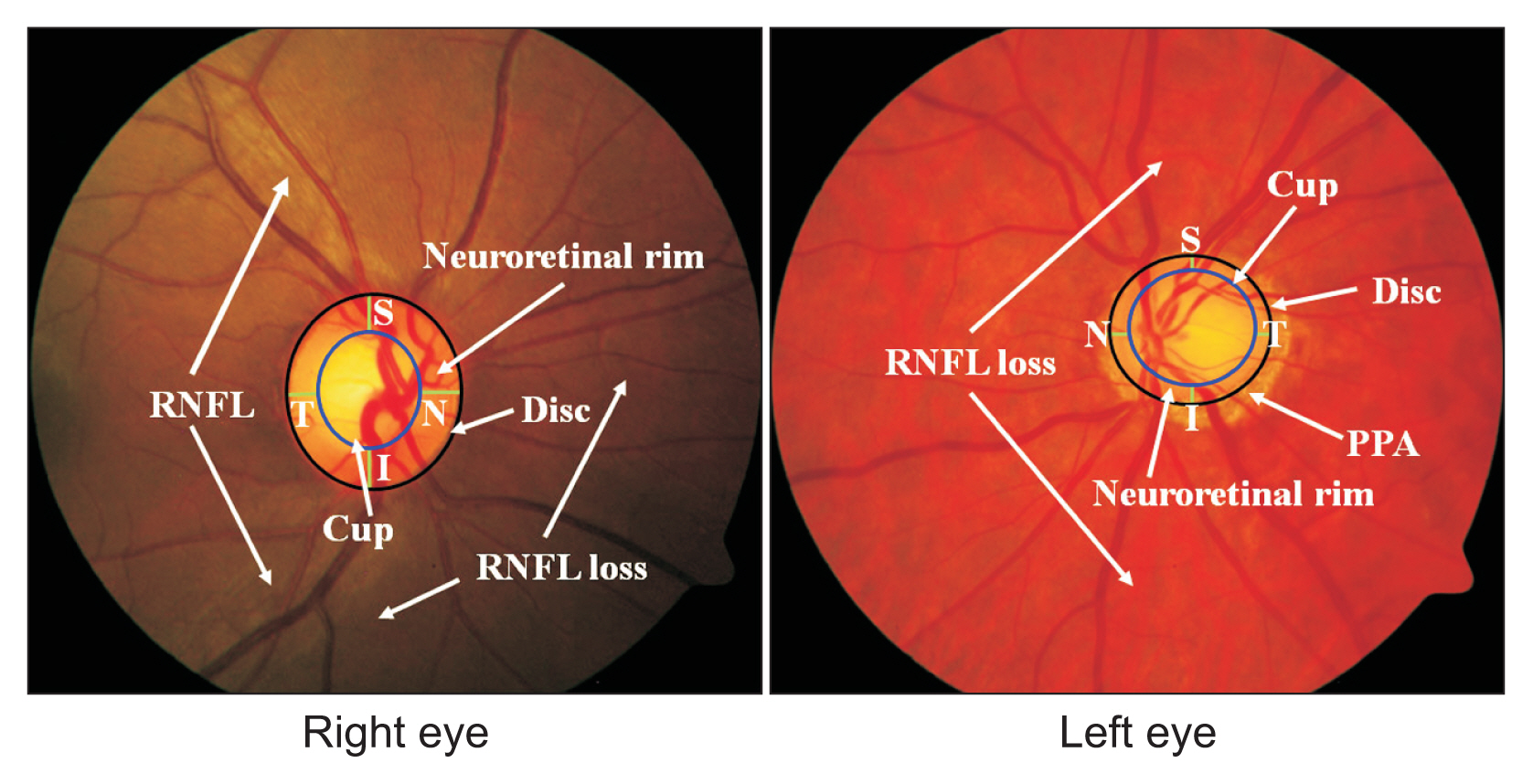

Ophthalmologists diagnose glaucoma manually by evaluating disease features in retinal fundus images. However, these assessments may be subjective because they are influenced by differences in educational background, experience, and psychological factors, especially when a large number of fundus images must be evaluated. The glaucoma features that need to be evaluated include the cup-to-disc ratio (CDR) [11,12], the neuroretinal rim (consisting of four sections: inferior, superior, nasal, and temporal), peripapillary atrophy (PPA) [13,14], and the retinal nerve fiber layer (RNFL) [15,16]. Previous studies have extracted and detected these features automatically. The disc and cup are light-colored round areas, with the cup located within the disc, and the neuroretinal rim is the area between the disc and cup. PPA is an area outside the disc with a crescent-like shape; in patients with severe glaucoma, PPA may even surround the disc like a ring [14]. The RNFL appears as bundles of white striations outside the disc. In glaucoma patients, these striations tend to thin or even disappear [15]. The structure of fundus images of the right and left eyes is shown in Figure 1.

Evaluating these features requires optic disc segmentation, which distinguishes the optic disc area from other objects treated as background. The CDR is computed as the ratio of the cup diameter to the optic disc diameter, so it depends directly on the disc boundary. Meanwhile, PPA and RNFL feature extraction must exclude the optic disc area, which likewise requires the disc to be segmented. This process remains challenging because retinal structure differs among patients and blood vessels cover the margins of the optic disc. The main techniques used for disc segmentation include clustering [11], superpixel classification [17], active contours [18], and thresholding [19]. More recently, deep learning methods have been developed with various models [12,20–23]. Before segmentation, pre-processing is applied for several purposes: forming a sub-image that focuses on the optic disc area [12,13,17,23,24] to reduce the computation time of subsequent steps, and removing blood vessels [12,17–20] to suppress their influence. Image enhancement has also been applied to clarify the edges of the disc using contrast-limited adaptive histogram equalization (CLAHE) [12,25], filtering [12,17,21,25], and color space adjustment [3,17,24].
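As a small illustration of why the disc boundary matters for the CDR, the following NumPy sketch computes a vertical CDR from two binary masks. It is not part of the proposed method: it assumes the disc and cup masks are already available (1 = structure, 0 = background) and measures each vertical diameter as the row extent of the mask, which is one common convention. The function names are hypothetical.

```python
import numpy as np

def vertical_diameter(mask: np.ndarray) -> int:
    """Vertical extent (in pixels) of the foreground region in a binary mask."""
    rows = np.flatnonzero(mask.any(axis=1))  # indices of rows containing foreground
    if rows.size == 0:
        return 0
    return int(rows[-1] - rows[0] + 1)

def vertical_cdr(disc_mask: np.ndarray, cup_mask: np.ndarray) -> float:
    """Cup-to-disc ratio from vertical diameters; assumes the cup lies inside the disc."""
    disc_d = vertical_diameter(disc_mask)
    if disc_d == 0:
        raise ValueError("empty disc mask")
    return vertical_diameter(cup_mask) / disc_d

# Toy example: a disc 10 rows tall containing a cup 4 rows tall.
disc = np.zeros((20, 20), dtype=np.uint8)
disc[5:15, 5:15] = 1
cup = np.zeros_like(disc)
cup[8:12, 8:12] = 1
print(vertical_cdr(disc, cup))  # 0.4
```

Any error in the disc boundary changes the denominator directly, which is one reason accurate disc segmentation is a prerequisite for CDR-based glaucoma assessment.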

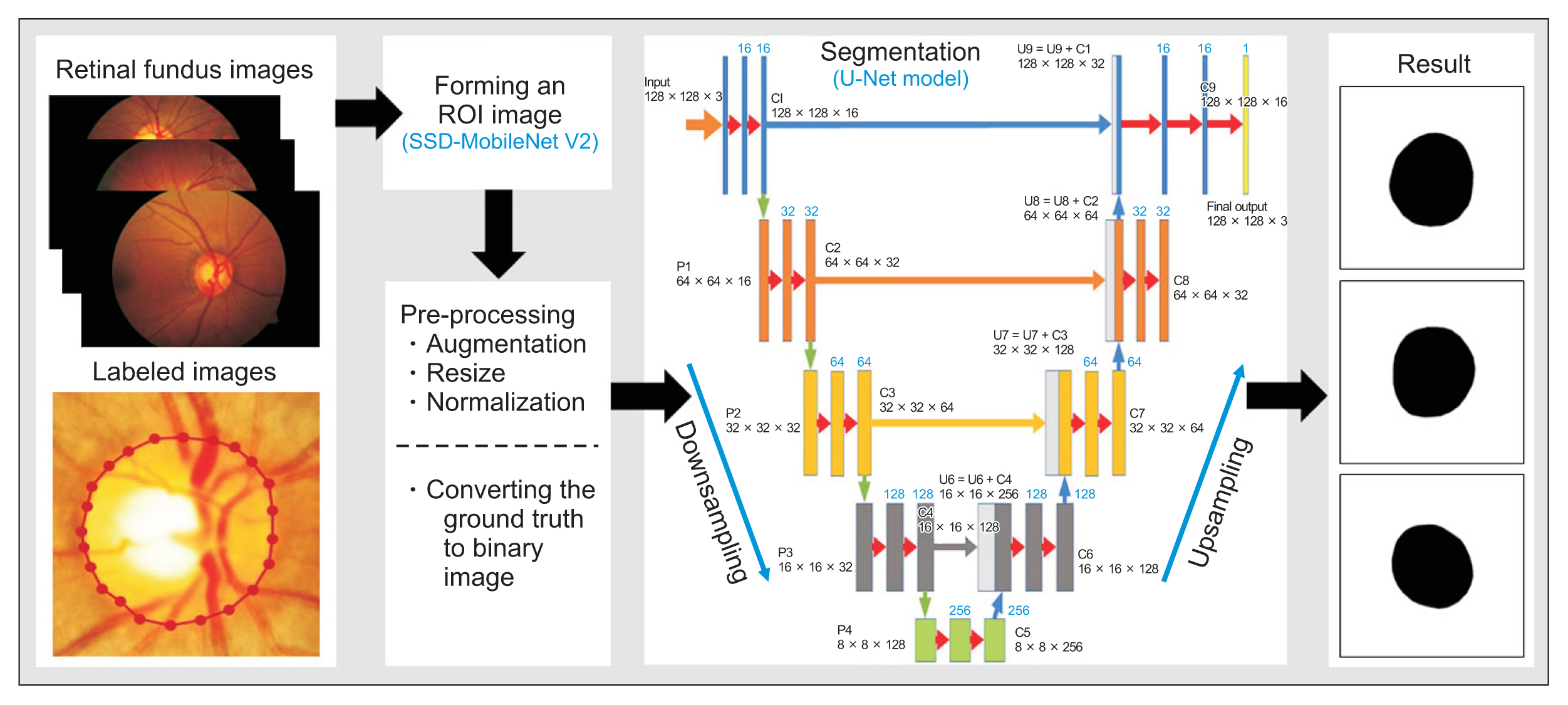

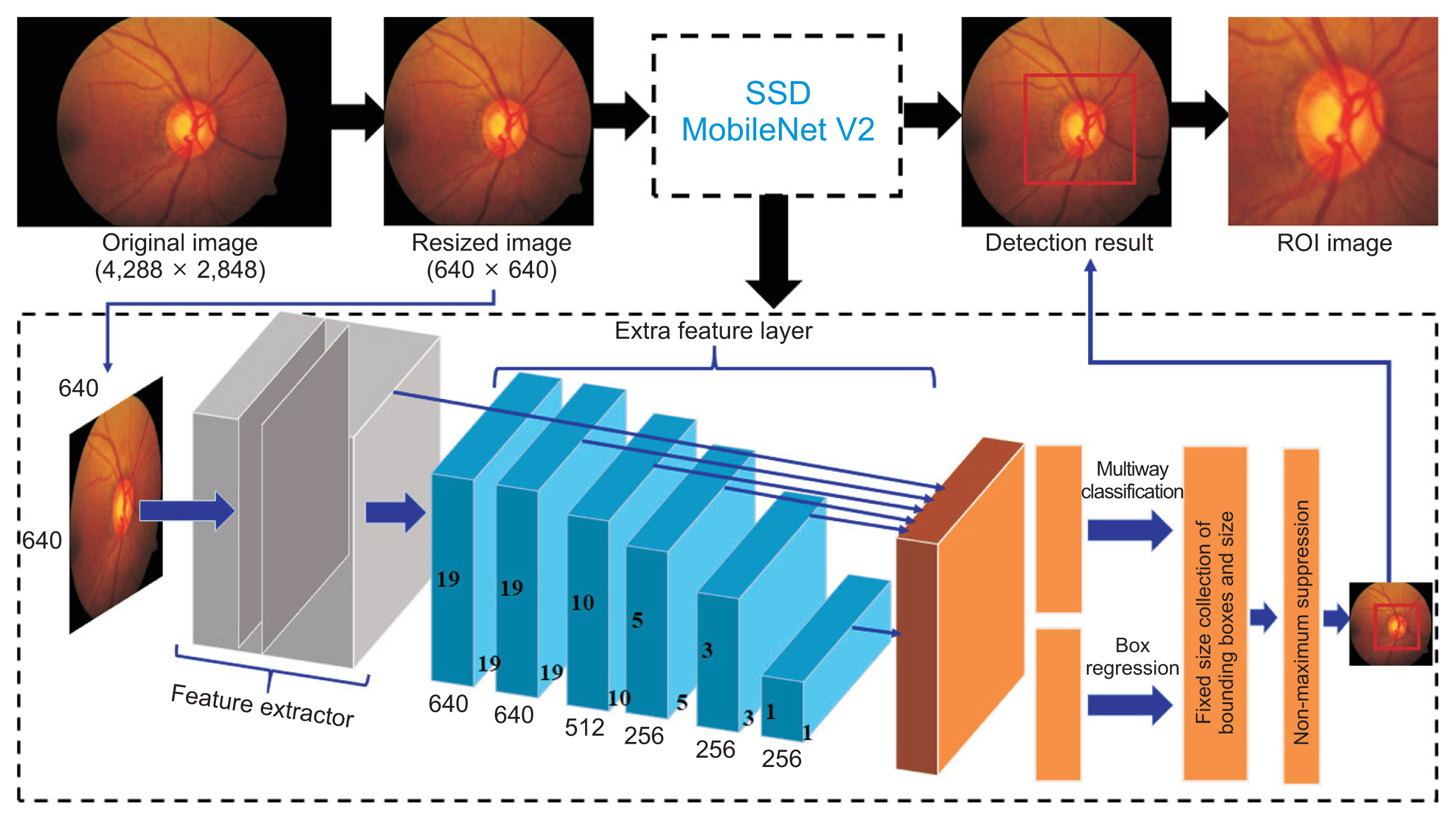

The optic disc strongly influences the evaluation of features in retinal fundus images, especially those related to glaucoma, and optic disc segmentation remains an active area of research. Therefore, this study proposes an automatic method for segmenting the optic disc in retinal fundus images as a basis for evaluating glaucoma features. The method applies two convolutional neural network (CNN) architectures: a single-shot multibox detector (SSD) MobileNetV2 to form the region-of-interest (ROI) image and a U-Net model to segment the optic disc area, with image inputs of 640 × 640 and 128 × 128 pixels, respectively. Between the two stages, pre-processing is performed, involving augmentation, resizing, and normalization.

The rest of this paper is organized as follows. The proposed method of optic disc segmentation, including a description of the datasets used in this study, the formation of ROI images, pre-processing, and the implementation of the U-Net model, is discussed in Section II. The performance evaluation of the proposed method is reported in Section III. Finally, Section IV discusses the results and concludes this study.

This study used two datasets to evaluate the proposed method: (1) a private dataset and (2) a public dataset, the Retinal Fundus Glaucoma Challenge (REFUGE) dataset. The private dataset consists of 350 retinal fundus images collected from normal and glaucomatous eyes at Dr. YAP Eye Hospital, Yogyakarta, Indonesia. Approval for the private dataset was obtained from the Ethics Committee (No. IRB KE/FK/0552/EC/2018). The images were captured at 4288 × 2848 pixels in JPEG format using a fundus camera with a 30° field of view (Carl Zeiss AG, Oberkochen, Germany) and an N150 Nikon digital camera (Nikon, Tokyo, Japan). For all fundus images, the ground truths of the optic disc boundaries were provided by an ophthalmologist with 11 years of experience. Meanwhile, the 1,200 images in REFUGE were acquired using a Zeiss Visucam 500 and a Canon CR-2 (Canon, Tokyo, Japan) [26]. The public dataset was used to test the robustness of the proposed method, as described elsewhere [25,27]. The images in each dataset were divided into training, validation, and testing sets comprising 70% (245 private and 840 REFUGE images), 10% (35 private and 120 REFUGE images), and 20% (70 private and 240 REFUGE images) of the data, respectively. Each dataset was used separately to train the deep learning model and to evaluate the proposed method.
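The 70/10/20 split can be reproduced with a short, seeded shuffle. The percentages and resulting counts come from the text; shuffling at random with a fixed seed is an assumption, since the study does not state how images were assigned to each subset, and `split_dataset` is a hypothetical helper.

```python
import random

def split_dataset(items, train=0.7, val=0.1, seed=0):
    """Shuffle and split a list into training, validation, and testing subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed for a reproducible split
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])  # remainder (about 20%) is the test set

for name, n in [("private", 350), ("REFUGE", 1200)]:
    tr, va, te = split_dataset(range(n))
    print(name, len(tr), len(va), len(te))
# private 245 35 70
# REFUGE 840 120 240
```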

The proposed method aimed to segment the optic disc in retinal fundus images automatically. There were three main processes: forming an ROI image, pre-processing, and segmentation. Pre-processing involved augmentation, resizing, and normalization. Additionally, the images labeled by the ophthalmologist were converted into binary images to form the ground truth, which was required to train the U-Net segmentation model. An illustration of the main processes of the proposed method is depicted in Figure 2.
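The conversion of the ophthalmologist's labeled images into binary ground truth can be sketched as a simple threshold. The annotation format is not described in the text, so this assumes, hypothetically, that the labeled disc region is brighter than a fixed threshold (127) in a grayscale or RGB label image; `to_binary_mask` is an illustrative name.

```python
import numpy as np

def to_binary_mask(label: np.ndarray, threshold: int = 127) -> np.ndarray:
    """Convert an annotated label image into a 0/1 ground-truth mask.

    Assumes (hypothetically) that disc pixels are brighter than `threshold`;
    RGB labels are reduced to one channel by taking the per-pixel maximum.
    """
    if label.ndim == 3:
        label = label.max(axis=2)
    return (label > threshold).astype(np.uint8)

label = np.array([[0, 200], [255, 90]], dtype=np.uint8)
print(to_binary_mask(label))
# [[0 1]
#  [1 0]]
```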

The aim of forming an ROI image is to obtain a sub-image smaller (height × width) than the original image by reducing the background area and focusing on the optic disc area, as shown in Figure 3. Consequently, the subsequent processes become simpler and require less computing time. This step used a CNN approach with the original retinal fundus image as input. First, the black area at the edges of the original 4288 × 2848 pixel image was cropped, and the result was resized to 640 × 640 pixels. This image was fed into an SSD MobileNetV2 model trained to detect the optic disc. Transfer learning was applied, with the MobileNetV2 architecture pre-trained on the COCO 2017 (COCO17) dataset [28]. The ROI was formed so that its edges did not intersect the disc boundary, which yields better segmentation results. An overview of the process of forming an ROI image and the SSD architecture is depicted in Figure 3.
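The geometric steps around the detector in ROI formation can be sketched as follows. The SSD MobileNetV2 detector itself is not reproduced; the sketch assumes it returns one box `(x_min, y_min, x_max, y_max)` in the 640 × 640 input coordinates. The black-edge threshold (10) and the 20% margin that keeps the ROI edge clear of the disc boundary are hypothetical values, not parameters reported in the study, and the function names are illustrative.

```python
import numpy as np

def crop_black_border(img: np.ndarray, threshold: int = 10) -> np.ndarray:
    """Remove the dark margins around the circular fundus field of view."""
    gray = img.max(axis=2) if img.ndim == 3 else img
    fg = gray > threshold  # pixels brighter than the (assumed) black background
    rows = np.flatnonzero(fg.any(axis=1))
    cols = np.flatnonzero(fg.any(axis=0))
    if rows.size == 0:
        return img
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def roi_from_box(img: np.ndarray, box, det_size: int = 640, margin: float = 0.2):
    """Crop an ROI around a detector box given in det_size x det_size coordinates.

    The box is rescaled to the image size and enlarged by `margin` (a fraction of
    its width/height) so that the ROI edge does not cut through the disc boundary.
    """
    h, w = img.shape[:2]
    sx, sy = w / det_size, h / det_size
    x0, y0, x1, y1 = box[0] * sx, box[1] * sy, box[2] * sx, box[3] * sy
    dx, dy = (x1 - x0) * margin, (y1 - y0) * margin
    x0, y0 = max(0, int(x0 - dx)), max(0, int(y0 - dy))
    x1, y1 = min(w, int(np.ceil(x1 + dx))), min(h, int(np.ceil(y1 + dy)))
    return img[y0:y1, x0:x1]

img = np.zeros((100, 200), dtype=np.uint8)
img[10:90, 20:180] = 255
print(crop_black_border(img).shape)  # (80, 160)
```

In this sketch, `roi_from_box` should receive the border-cropped image (before it is resized for the detector), so the ROI is cut at the higher resolution; clipping to the image bounds handles discs that lie near the edge of the field of view.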

Pre-processing was applied to emphasize the main object and enable better interpretation, simplifying the subsequent process and reducing the computation time. It consisted of augmentation, resizing, and normalization, as well as converting the images labeled by the ophthalmologist into binary ground-truth images. First, the datasets were augmented to increase the variety and number of images, which improves the robustness and generalization of the trained model. Augmentation was implemented by flipping the images about the vertical and horizontal axes [22,23,29]. Next, the images were resized from 640 × 640 pixels to 128 × 128 pixels, as in [3,12]. Finally, normalization was conducted by scaling all intensity values into the range 0 to 1 [23].
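The three pre-processing operations can be sketched in NumPy as below. Assumptions: augmentation keeps the original image and adds one horizontal and one vertical flip (the study does not state how many copies were generated), the same flip is applied to the ground-truth mask, resizing uses nearest-neighbor sampling as a dependency-free stand-in for whatever interpolation the authors used, and normalization divides 8-bit intensities by 255. All function names are hypothetical.

```python
import numpy as np

def augment_flips(img: np.ndarray, mask: np.ndarray):
    """Return the original pair plus horizontal and vertical flips (same flip on both)."""
    return [
        (img, mask),
        (np.flip(img, axis=1), np.flip(mask, axis=1)),  # flip about the vertical axis
        (np.flip(img, axis=0), np.flip(mask, axis=0)),  # flip about the horizontal axis
    ]

def resize_nearest(img: np.ndarray, size: int = 128) -> np.ndarray:
    """Nearest-neighbor resize to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]

def normalize(img: np.ndarray) -> np.ndarray:
    """Scale 8-bit intensities into the range [0, 1]."""
    return img.astype(np.float32) / 255.0

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(640, 640, 3)).astype(np.uint8)
mask = (rng.random((640, 640)) > 0.5).astype(np.uint8)
batch = [(normalize(resize_nearest(i)), resize_nearest(m))
         for i, m in augment_flips(image, mask)]
print(len(batch), batch[0][0].shape, batch[0][1].shape)  # 3 (128, 128, 3) (128, 128)
```

Nearest-neighbor sampling keeps mask values strictly 0 or 1, which suits the ground truth; for the images themselves, an interpolating resize (for example, bilinear) is usually preferred.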

The proposed method used a U-Net architecture, which has been successfully applied to segmenting several kinds of medical images [2,3,23]. This architecture includes two paths, downsampling and upsampling, both of which use the rectified linear unit (ReLU) as the activation function. In each downsampling step, two 3 × 3 convolution layers with the same number of feature channels were applied, followed by 2 × 2 max pooling with a stride of 2. Along the downsampling path, the number of feature channels was increased from 16 to 256. Conversely, along the upsampling path, the number of feature channels was decreased from 256 to 16; each upsampling step applied a 2 × 2 up-convolution layer followed by two 3 × 3 convolution layers. Images of 128 × 128 pixels were fed into the model for training. The model used the MobileNetV2 encoder and was trained for 100 epochs with a batch size of 16 and a learning rate of 0.00001 [25]. Training was performed on a personal computer with an NVIDIA GeForce GTX 1070 graphics processing unit (GPU) with 8 GB of memory and a 3.40 GHz Intel Core i7-3770K CPU.
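To make the encoder-decoder description concrete, the following pure-Python sketch traces the feature-map shape through the network for a 128 × 128 input. It assumes "same" padding (so 3 × 3 convolutions preserve spatial size), channel counts that double at each level (16, 32, 64, 128, 256), and a single-channel sigmoid output; none of these details is stated explicitly in the text, and the trace follows the plain U-Net described above rather than the MobileNetV2 encoder variant. `unet_shapes` is a hypothetical helper.

```python
def unet_shapes(size=128, channels=(16, 32, 64, 128, 256)):
    """Trace (stage, height, width, channels) through a U-Net with 'same'-padded 3x3 convs.

    Each encoder level applies two 3x3 convs, then 2x2 max pooling (stride 2)
    halves the spatial size. Each decoder level applies a 2x2 up-convolution
    (doubling the size), concatenates the matching skip connection, and
    applies two 3x3 convs.
    """
    trace, s = [], size
    last = len(channels) - 1
    for i, c in enumerate(channels):
        trace.append(("encoder" if i < last else "bottleneck", s, s, c))
        if i < last:
            s //= 2  # 2x2 max pooling with stride 2
    for c in reversed(channels[:-1]):
        s *= 2  # 2x2 up-convolution
        trace.append(("decoder", s, s, c))
    trace.append(("output", s, s, 1))  # assumed 1x1 conv + sigmoid disc probability map
    return trace

for stage in unet_shapes():
    print(stage)
```

Under these assumptions, the four pooling steps reduce the 128 × 128 input to an 8 × 8 × 256 bottleneck, and the decoder restores a 128 × 128 single-channel map that can be compared pixel by pixel with the ground truth.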

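The performance of the proposed optic disc segmentation method was evaluated using five evaluation parameters: precision, recall, the F-score, the Dice score (DS), and intersection over union (IoU) [24,27]. These parameters are calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\text{F-score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$DS = \frac{2\,TP}{2\,TP + FP + FN}$$

$$IoU = \frac{TP}{TP + FP + FN}$$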

where true positive (TP) is the number of pixels classified as optic disc area in both the ground truth image and the output of the proposed method, false positive (FP) is the number of pixels classified as non-optic disc area in the ground truth image but as disc area by the proposed method, and false negative (FN) is the number of pixels classified as optic disc area in the ground truth image but as non-optic disc area by the proposed method. All five parameters lie in the range 0 to 1, and values closer to 1 indicate more accurate segmentation.
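The pixel-level definitions translate directly into code. The following NumPy sketch computes all five parameters for one predicted mask and one ground-truth mask; it assumes both are binary arrays of the same shape and that each contains at least one disc pixel (otherwise the divisions are undefined). How per-image values were aggregated over the test set is not stated in the text, so the sketch covers a single image only. For a single mask pair, the F-score and DS are algebraically identical; the slightly different values in Table 1 presumably stem from how the metrics were averaged, which is an inference rather than something the study reports.

```python
import numpy as np

def segmentation_metrics(pred: np.ndarray, gt: np.ndarray) -> dict:
    """Pixel-level precision, recall, F-score, Dice score, and IoU for binary masks.

    Assumes both masks are non-empty so that no denominator is zero.
    """
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = np.sum(pred & gt)   # disc in both prediction and ground truth
    fp = np.sum(pred & ~gt)  # predicted disc, ground-truth background
    fn = np.sum(~pred & gt)  # ground-truth disc, predicted background
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = 2 * precision * recall / (precision + recall)
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return {"precision": float(precision), "recall": float(recall),
            "f_score": float(f_score), "dice": float(dice), "iou": float(iou)}

gt = np.zeros((20, 20), dtype=np.uint8)
gt[5:15, 5:15] = 1
pred = np.zeros_like(gt)
pred[6:16, 5:15] = 1  # prediction shifted down by one row
print(segmentation_metrics(pred, gt))
```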

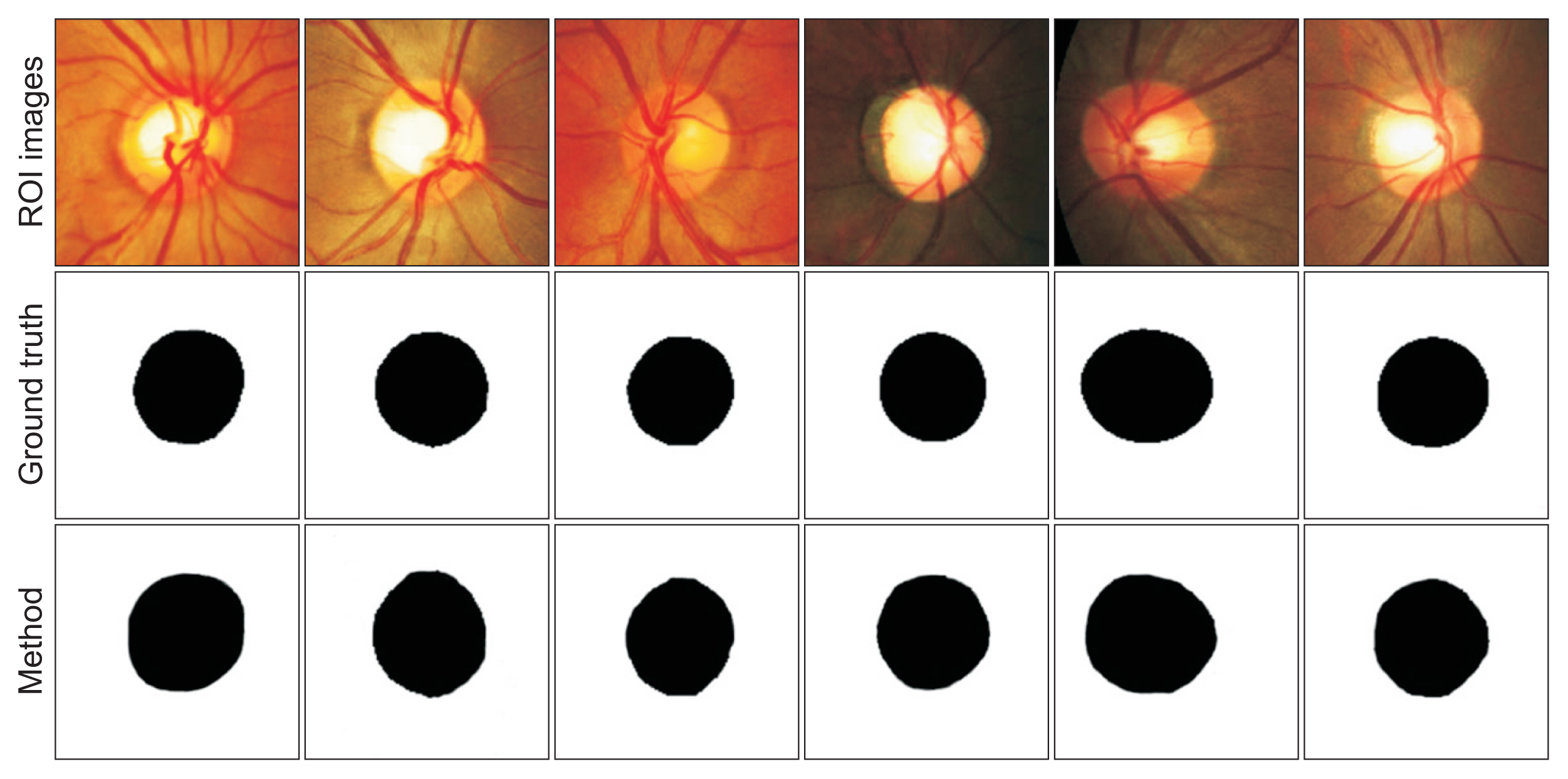

The proposed method was evaluated using retinal fundus images with various structures and image quality. This diversity may affect the results of optic disc segmentation, especially in images containing PPA or with cups nearly as large as the disc. Examples comparing disc segmentation results from the ophthalmologist (ground truth) and the proposed method are shown in Figure 4. The segmentation performance on both datasets is summarized in Table 1.

Figure 4 shows examples of disc segmentation results from the two datasets: the private dataset in columns 1–3 and REFUGE in columns 4–6. The proposed method misclassified the optic disc area in retinal fundus images containing mild PPA, which caused over-segmentation. Another error generally occurred in patients with severe glaucoma whose optic cup was close in size to the optic disc. Meanwhile, Table 1 shows that the proposed method performed well on both datasets, with F-score, DS, and IoU values of 0.9880, 0.9852, and 0.9763 on the private dataset (computation time: 17 minutes and 56 seconds) and 0.9854, 0.9838, and 0.9712 on the REFUGE dataset (computation time: 59 minutes and 16 seconds). Although the private dataset contained far fewer images than the REFUGE dataset, only slight performance discrepancies were observed.

An automatic optic disc segmentation method was developed using a CNN-based detector and a U-Net segmentation model. The CNN model was used to form ROI images to reduce the computation time. Subsequently, the ROI images were augmented and resized, and their intensity values were normalized. A U-Net model was then applied to segment the optic disc. The proposed method was tested on two datasets (private and REFUGE) and achieved high F-score, DS, and IoU values (0.9880, 0.9852, and 0.9763, respectively, on the private dataset; 0.9854, 0.9838, and 0.9712, respectively, on the REFUGE dataset). These results suggest that the proposed method is suitable for both the private and REFUGE datasets.

Table 2 compares the results of the proposed method with those of other methods from previous studies that used the REFUGE dataset, in terms of the F-score and DS [23,25,27,29]. The proposed method achieved F-score and DS values of 0.9854 and 0.9838, respectively, which are higher than those reported for the other methods. The proposed method may help ophthalmologists automatically localize the optic disc in fundus images when analyzing glaucoma. In future work, other segmentation methods could be explored to reduce the errors described above and improve accuracy.

Acknowledgments

The authors would like to thank KEMENDIKBUD RISTEK Indonesia for the funding of research in the scheme of Penelitian Dasar in 2021 (Grant No. 594/UN17.l1/PG/2021).

Figure 1

Structure of retinal fundus images from the right and left eyes. RNFL: retinal nerve fiber layer, PPA: peripapillary atrophy.

Figure 3

Overview of the process of forming a region-of-interest (ROI) image and the resulting image in each step. SSD: single-shot multibox detector.

Figure 4

Examples of the optic disc segmentation results obtained by the ophthalmologist (ground truth) and the proposed method. ROI: region-of-interest.

Table 1

Comparison of the segmentation results of the private dataset and REFUGE dataset

| Dataset | Precision | Recall | F-score | DS | IoU | Computation time |
|---|---|---|---|---|---|---|
| Private | 0.9992 | 0.9761 | 0.9880 | 0.9852 | 0.9763 | 0 hr 17 min 56 s |
| REFUGE | 0.9982 | 0.9718 | 0.9854 | 0.9838 | 0.9712 | 0 hr 59 min 16 s |

Table 2

Comparison of the performance of the proposed method with other methods on the REFUGE dataset

| Study | Year | Method | F-score | DS |
|---|---|---|---|---|
| Bian et al. [23] | 2020 | Two cascaded U-shape networks | 0.9367 | 0.9331 |
| Yuan et al. [25] | 2021 | W-shape CNN with multi-scale input | 0.9644 | - |
| Wang et al. [29] | 2021 | Feature detection sub-network and a cross-connection sub-network | - | 0.9692 |
| Zhang et al. [27] | 2021 | Transferable attention U-Net model | - | 0.9600 |
| Proposed method | 2021 | CNN with SSD MobileNetV2 and U-Net model | 0.9854 | 0.9838 |

References

1. Nugroho HA, Frannita EL, Ardiyanto I, Choridah L. Computer aided diagnosis for thyroid cancer system based on internal and external characteristics. J King Saud Univ-Comput Inf Sci 2021 33(3):329-39. https://doi.org/10.1016/j.jksuci.2019.01.007

2. Kim YJ, Ganbold B, Kim KG. Web-based spine segmentation using deep learning in computed tomography images. Healthc Inform Res 2020 26(1):61-7. https://doi.org/10.4258/hir.2020.26.1.61

3. Soulami KB, Kaabouch N, Saidi MN, Tamtaoui A. Breast cancer: one-stage automated detection, segmentation, and classification of digital mammograms using UNet model based-semantic segmentation. Biomed Signal Process Control 2022 66:102481. https://doi.org/10.1016/j.bspc.2021.102481

4. Sela EI, Pulungan R, Widyaningrum R, Shantiningsih RR. Method for automated selection of the trabecular area in digital periapical radiographic images using morphological operations. Healthc Inform Res 2019 25(3):193-200. https://doi.org/10.4258/hir.2019.25.3.193

5. Das P, Pal C, Acharyya A, Chakrabarti A, Basu S. Deep neural network for automated simultaneous intervertebral disc (IVDs) identification and segmentation of multi-modal MR images. Comput Methods Programs Biomed 2021 205:106074. https://doi.org/10.1016/j.cmpb.2021.106074

6. Li X, Dou Q, Chen H, Fu CW, Qi X, Belavy DL, et al. 3D multi-scale FCN with random modality voxel dropout learning for intervertebral disc localization and segmentation from multi-modality MR images. Med Image Anal 2018 45:41-54. https://doi.org/10.1016/j.media.2018.01.004

7. Makroum MA, Adda M, Bouzouane A, Ibrahim H. Machine learning and smart devices for diabetes management: systematic review. Sensors (Basel) 2022 22(5):1843. https://doi.org/10.3390/s22051843

8. Kumar S, Adarsh A, Kumar B, Singh AK. An automated early diabetic retinopathy detection through improved blood vessel and optic disc segmentation. Opt Laser Technol 2020 121:105815. https://doi.org/10.1016/j.optlastec.2019.105815

9. Septiarini A, Hamdani, Khairina DM. The contour extraction of cup in fundus images for glaucoma detection. Int J Electr Comput Eng 2016 6(6):2797-804. https://doi.org/10.11591/ijece.v6i6.pp2797-2804

10. Abdullah F, Imtiaz R, Madni HA, Khan HA, Khan TM, Khan MA, et al. A review on glaucoma disease detection using computerized techniques. IEEE Access 2021 9:37311-33. https://doi.org/10.1109/ACCESS.2021.3061451

11. Thakur N, Juneja M. Optic disc and optic cup segmentation from retinal images using hybrid approach. Expert Syst Appl 2019 127:308-22. https://doi.org/10.1016/j.eswa.2019.03.009

12. Veena HN, Muruganandham A, Kumaran TS. A novel optic disc and optic cup segmentation technique to diagnose glaucoma using deep learning convolutional neural network over retinal fundus images. J King Saud Univ-Comput Inf Sci 2022 34(8):6187-98. https://doi.org/10.1016/j.jksuci.2021.02.003

13. Septiarini A, Pulungan R, Harjoko A, Ekantini R. Peripapillary atrophy detection in fundus images based on sectors with scan lines approach. Proceedings of 2018 3rd International Conference on Informatics and Computing (ICIC); 2018 Oct 17–18. Palembang, Indonesia; p. 1-6. https://doi.org/10.1109/IAC.2018.8780490

14. Sharma A, Agrawal M, Roy SD, Gupta V, Vashisht P, Sidhu T. Deep learning to diagnose peripapillary atrophy in retinal images along with statistical features. Biomed Signal Process Control 2021 64:102254. https://doi.org/10.1016/j.bspc.2020.102254

15. Odstrcilik J, Kolar R, Tornow RP, Jan J, Budai A, Mayer M, et al. Thickness related textural properties of retinal nerve fiber layer in color fundus images. Comput Med Imaging Graph 2014 38(6):508-16. https://doi.org/10.1016/j.compmedimag.2014.05.005

16. Septiarini A, Harjoko A, Pulungan R, Ekantini R. Automated detection of retinal nerve fiber layer by texture-based analysis for glaucoma evaluation. Healthc Inform Res 2018 24(4):335-45. https://doi.org/10.4258/hir.2018.24.4.335

17. Rehman ZU, Naqvi SS, Khan TM, Arsalan M, Khan MA, Khalil MA. Multi-parametric optic disc segmentation using superpixel based feature classification. Expert Syst Appl 2019 120:461-73. https://doi.org/10.1016/j.eswa.2018.12.008

18. Zulfira FZ, Suyanto S, Septiarini A. Segmentation technique and dynamic ensemble selection to enhance glaucoma severity detection. Comput Biol Med 2021 139:104951. https://doi.org/10.1016/j.compbiomed.2021.104951

19. Septiarini A, Harjoko A, Pulungan R, Ekantini R. Optic disc and cup segmentation by automatic thresholding with morphological operation for glaucoma evaluation. Signal Image Video Process 2017 11:945-52. https://doi.org/10.1007/s11760-016-1043-x

20. Fu Y, Chen J, Li J, Pan D, Yue X, Zhu Y. Optic disc segmentation by U-Net and probability bubble in abnormal fundus images. Pattern Recognit 2021 117:107971. https://doi.org/10.1016/j.patcog.2021.107971

21. Hasan MK, Alam MA, Elahi MT, Roy S, Marti R. DRNet: segmentation and localization of optic disc and fovea from diabetic retinopathy image. Artif Intell Med 2021 111:102001. https://doi.org/10.1016/j.artmed.2020.102001

22. Yu S, Xiao D, Frost S, Kanagasingam Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Comput Med Imaging Graph 2019 74:61-71. https://doi.org/10.1016/j.compmedimag.2019.02.005

23. Bian X, Luo X, Wang C, Liu W, Lin X. Optic disc and optic cup segmentation based on anatomy guided cascade network. Comput Methods Programs Biomed 2020 197:105717. https://doi.org/10.1016/j.cmpb.2020.105717

24. Zilly J, Buhmann JM, Mahapatra D. Glaucoma detection using entropy sampling and ensemble learning for automatic optic cup and disc segmentation. Comput Med Imaging Graph 2017 55:28-41. https://doi.org/10.1016/j.compmedimag.2016.07.012

25. Yuan X, Zhou L, Yu S, Li M, Wang X, Zheng X. A multi-scale convolutional neural network with context for joint segmentation of optic disc and cup. Artif Intell Med 2021 113:102035. https://doi.org/10.1016/j.artmed.2021.102035

26. Orlando JI, Fu H, Barbosa Breda J, van Keer K, Bathula DR, Diaz-Pinto A, et al. REFUGE challenge: a unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Med Image Anal 2020 59:101570. https://doi.org/10.1016/j.media.2019.101570

27. Zhang Y, Cai X, Zhang Y, Kang H, Ji X, Yuan X. TAU: transferable attention U-Net for optic disc and cup segmentation. Knowl Based Syst 2021 213:106668. https://doi.org/10.1016/j.knosys.2020.106668

28. Zhang L, Lim CP. Intelligent optic disc segmentation using improved particle swarm optimization and evolving ensemble models. Appl Soft Comput 2020 92:106328. https://doi.org/10.1016/j.asoc.2020.106328

29. Wang L, Gu J, Chen Y, Liang Y, Zhang W, Pu J, et al. Automated segmentation of the optic disc from fundus images using an asymmetric deep learning network. Pattern Recognit 2021 112:107810. https://doi.org/10.1016/j.patcog.2020.107810
