Automated Detection of Retinal Nerve Fiber Layer by Texture-Based Analysis for Glaucoma Evaluation

Abstract

Objectives

The retinal nerve fiber layer (RNFL) is a site of glaucomatous optic neuropathy whose early changes need to be detected because glaucoma is one of the most common causes of blindness. This paper proposes an automated RNFL detection method based on the texture feature by forming a co-occurrence matrix and a backpropagation neural network as the classifier.

Methods

We propose two texture features, namely, correlation and autocorrelation based on a co-occurrence matrix. Those features are selected by using a correlation feature selection method. Then the backpropagation neural network is applied as the classifier to implement RNFL detection in a retinal fundus image.

Results

We used 40 retinal fundus images as testing data and 160 sub-images (80 showing a normal RNFL and 80 showing RNFL loss) as training data to evaluate the performance of our proposed method. Overall, this work achieved an accuracy of 94.52%.

Conclusions

Our results demonstrated that the proposed method achieved a high accuracy, which indicates good performance.

I. Introduction

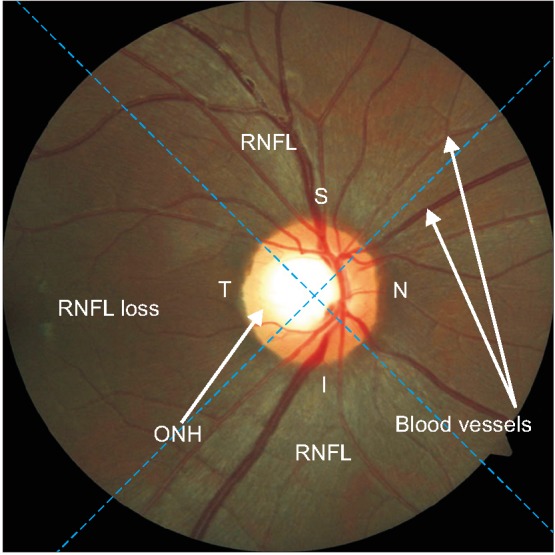

The retinal nerve fiber layer (RNFL) is part of the retina located on the outside of the optic nerve head (ONH), which can be observed in a retinal fundus image. Visually, the ONH is a bright rounded or slightly oval area, and the RNFL is an area with distinctive texture that appears like a collection of striations with a whitish color. In normal eyes, the texture of the RNFL is clearly visible and distributed evenly in all sectors, while in glaucoma patients, the structure of the RNFL tends to be lost, which indicates defects of the RNFL [1].

A retinal fundus image is divided into four sectors, namely inferior (I), superior (S), nasal (N), and temporal (T). Sectors I and S are at the bottom and top, respectively. In the right eye, sectors N and T are on the right and left sides, and vice versa for the left eye. The thickest and most clearly visible parts of the RNFL structure are located in sectors I and S [2]. Figure 1 gives an overview of the retinal texture, showing the differences between areas with the RNFL and areas with RNFL loss, as well as the sector partition on a retinal fundus image of the right eye.

Overview of the retinal structure in retinal fundus image of the right eye and of the sector partition. RNFL: retinal nerve fiber layer, ONH: optic nerve head.

The RNFL is a site of glaucomatous optic neuropathy, whose early changes need to be detected [1]. The detection of RNFL loss is necessary because glaucoma is an incurable disease that may cause permanent blindness. Glaucoma is the second most common cause of blindness in the world [3], and the number of glaucoma sufferers is expected to increase in 2020 [4]. Symptoms of glaucoma are generally not felt by the sufferer, and when glaucoma is finally detected, the damage to the structure of the retina may already be severe.

There are several digital imaging technologies that can be used to observe RNFL structures, namely optical coherence tomography (OCT), confocal scanning laser ophthalmoscopy, scanning laser polarimetry, and fundus camera imaging [5]. In this research, we used images produced by fundus cameras to analyze the RNFL because they are more available in public healthcare and cheaper than other tools. In addition, fundus images can be utilized to diagnose and monitor other diseases, such as diabetes, hypertension, coronary heart disease, and stroke [6].

An ophthalmologist can usually detect the RNFL easily, but in certain cases, such as when the RNFL has an indistinct structure or when a large number of images must be examined, detection may be too time consuming and the result may become subjective. Therefore, several approaches have been developed for computer-aided RNFL detection. To detect the RNFL, ONH segmentation is required because the area of the ONH does not contain the RNFL and must be eliminated. Segmentation is preceded by ONH localization, which is conducted to obtain a sub-image that captures the whole ONH and thereby simplifies the subsequent process. Localization is applied on the green channel to predict the pixel candidates of the ONH [7,8,9]. Furthermore, in several previous works, segmentation of the ONH is implemented in the red channel because the ONH can be easily identified in this channel [9,10,11,12].

Works related to segmentation of the ONH are still scant, and several previous studies are briefly summarized here. Two commonly used methods, namely threshold-based [9,12,13] and active contour [7,8,14,15], have been developed for ONH segmentation in the last 5 years. Further, fully automatic ONH segmentation by template matching has been developed, in which the superpixel method is applied with histograms and center surround statistics to determine the ONH and non-ONH areas [16]. Moreover, an optimal framework based on the Radon transformation of multi-overlapping windows was developed in [10]. In [17], the multiresolution sliding band filter (SBF) method is applied to predict the boundary of the ONH. In general, segmentation of the ONH yields misclassified areas that can be removed using morphological filtering as in [7,9,13,18].

Only a few studies related to RNFL detection based on fundus image processing have been conducted. Analysis based on fundus images has been performed in [19,20] by comparing the results to the information in OCT images, while in [21,22,23], the results are compared to the clinical data obtained from experts. A computer-aided method for analysis of the RNFL was proposed in [20] using texture analysis for prediction of the RNFL thickness with Gaussian Markov random fields (GMRF) and local binary patterns. Fundus images with blue and green layers from camera filtering by the standard blue-green filter BPB 45 provide the grayscale images used as experimental data in [19]. Texture analysis is also conducted in [22] by applying the fractal dimension method, while in [23] analysis of the RNFL is implemented by combining color and texture features. The color features are formed by two color histograms computed in the RGB and Lab color spaces. Meanwhile, the texture feature is obtained in the RGB space using local binary patterns. Furthermore, the quantification of the dark area around the ONH is performed in [21].

In this paper, we propose a method for automated detection of the RNFL based on the texture feature by forming a co-occurrence matrix derived from small areas (patches) outside the ONH where the RNFL is present, which are part of the retinal fundus image; this is the cornerstone of our contribution. Unlike the existing approach, which discards areas with blood vessels from the focal areas that need to be extracted, in this work, the extraction area may contain blood vessels. The area with blood vessels needs to be considered because the RNFL is easily detectable in this area. Another contribution is that we apply the backpropagation neural network (BPNN) method in classification to detect the presence of the RNFL on all patches, followed by discarding the isolated patches based on the neighbors in 8 directions to determine the presence of the RNFL in a sector.

The rest of this paper is organized as follows. The proposed method of RNFL detection, including its feature extraction and classification, is discussed in Section II. The datasets used in this research, along with the performance of the proposed method based on our experiments, are reported in Section III. Finally, Section IV discusses the results and concludes this paper.

II. Methods

We propose an automated RNFL detection method for application to retinal fundus images. This method is divided into two phases, the forming of features and testing, where each phase consists of several processes. In the feature forming phase, we obtain the feature values of several sample images that are later used in the testing phase, while the testing phase aims to detect the RNFL in all sectors. The framework of our proposed method is presented in Figure 2.

Stage diagram of the proposed retinal nerve fiber layer (RNFL) detection method. ROI: region of interest, ONH: optic nerve head.

1. Forming of Features

The input of this phase is a collection of sample images and class labels. The sample images are 50 × 50 pixel RGB patches selected manually, and the class label of each sample image is ‘0’ for RNFL loss or ‘1’ for RNFL. There are 160 sample images, consisting of 80 images with RNFL and 80 without RNFL. In this phase, each sample image is first converted to a grayscale image with 256 levels, as in [22], and feature extraction is then applied for texture analysis using the gray level co-occurrence matrix (GLCM) method. Co-occurrence matrices are formed in four directions, i.e., 0°, 45°, 90°, and 135°. Subsequently, we compute the values of 10 texture features (energy, contrast, correlation, homogeneity, entropy, autocorrelation, dissimilarity, cluster shade, cluster prominence, and maximum probability) as in [24] because they have been widely used to perform texture analysis, especially in the biomedical field. With 10 features in each of the 4 directions, 40 features are obtained. Then, to simplify the following process, feature selection is performed to reduce the dimensionality of the features, as is common for medical images [25,26], using the correlation-based feature subset selection (CFS) method as in [27,28]. Feature selection is carried out using Weka version 3.8 as in our previous work [26]. It yields two selected features, namely, autocorrelation 0° (f1) and correlation 90° (f2). Finally, this phase saves the features and class labels of all sample images for use in the classification process in the testing phase.
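To make the two selected features concrete, the following is a minimal sketch of how a co-occurrence matrix and the features f1 and f2 could be computed for one grayscale patch. It is not the implementation used in this work: the pixel distance of 1, the non-symmetric pair counting, the zero-based gray-level indices, and the function names (`glcm`, `autocorrelation`, `correlation`, `selected_features`) are all assumptions made for illustration.

```python
from math import sqrt

# (row step, column step) to the neighbor pixel for each direction,
# at an assumed distance of one pixel.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(image, angle):
    """Normalized co-occurrence probabilities {(i, j): p} for one direction.

    image is a 2-D list of integer gray levels. Pairs are counted in one
    direction only (non-symmetric matrix), which is an assumption here.
    """
    dr, dc = OFFSETS[angle]
    rows, cols = len(image), len(image[0])
    counts = {}
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                pair = (image[r][c], image[r2][c2])
                counts[pair] = counts.get(pair, 0) + 1
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def autocorrelation(p):
    """Sum of i * j * p(i, j) over all gray-level pairs."""
    return sum(i * j * v for (i, j), v in p.items())


def correlation(p):
    """Covariance of the paired gray levels divided by both standard deviations."""
    mu_i = sum(i * v for (i, _), v in p.items())
    mu_j = sum(j * v for (_, j), v in p.items())
    var_i = sum((i - mu_i) ** 2 * v for (i, _), v in p.items())
    var_j = sum((j - mu_j) ** 2 * v for (_, j), v in p.items())
    cov = sum((i - mu_i) * (j - mu_j) * v for (i, j), v in p.items())
    denom = sqrt(var_i * var_j)
    # A perfectly flat patch has zero variance; return 0.0 by convention.
    return cov / denom if denom > 0 else 0.0


def selected_features(patch):
    """Return (f1, f2) = (autocorrelation at 0 degrees, correlation at 90 degrees)."""
    return autocorrelation(glcm(patch, 0)), correlation(glcm(patch, 90))
```

Calling `selected_features(patch)` on a 50 × 50 grayscale patch would give one two-dimensional feature vector per patch, which is the form of input the classifier in the testing phase expects.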

2. Testing Phase

This phase consists of three main processes, namely ROI (region of interest) detection, feature extraction, and classification. The aims of these processes are (1) ROI detection to form the sub-image (ROI image) capturing the extraction area of the RNFL, (2) feature extraction to obtain the features of the RNFL, and (3) classification to determine whether a particular area contains the RNFL or not. The stages of these processes are described in the following subsections.

1) ROI detection

The input of this process is a retinal fundus image (Figure 3A) to form an ROI image that focuses on the outside of the ONH area. This process consists of two main sub-processes, namely ONH localization and ONH segmentation. ONH localization is done based on our previous work [9], which begins by thresholding with a high threshold value on the green channel because the central part of the ONH is more easily distinguished in this channel. The result of thresholding may produce a non-ONH area at the edge of the retina, and this needs to be removed by using a border mask. This masking is performed by thresholding on the red channel. Afterwards, edge detection is carried out by the Sobel method, followed by a morphological operation, namely, dilation. Finally, the center estimate of the ONH is set as the center of the ROI image.

Original image and the results of region of interest (ROI) detection: (A) retinal fundus image, (B) boundary of optic nerve head (ONH), (C) updated position of ONH, and (D) ROI image with sector division.

In general, the position of the ONH is not exactly in the center of the ROI image (Figure 3B); therefore, the ROI image must be updated by ONH segmentation. This is done to precisely identify the center of the ONH by separating the ONH area from the background. ONH segmentation begins with median filtering of the ROI image in the red channel, followed by thresholding by the Otsu method. Then, two morphological operations, opening and dilation, are carried out. These operations aim to remove adjoining non-ONH areas and to produce an ONH region of appropriate size. Lastly, ellipse fitting is performed, and the result can be seen in Figure 3B.
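The thresholding step of ONH segmentation can be sketched as follows. This covers only the Otsu method; median filtering, the morphological operations, and ellipse fitting are omitted. The function name `otsu_threshold` and the flat list of red-channel values are illustrative assumptions, not part of the original implementation.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    pixels is a flat list of integer gray levels in [0, levels).
    Pixels with a value greater than t are treated as foreground (ONH candidates).
    """
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1

    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of gray levels of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # no background pixels yet
        w1 = total - w0
        if w1 == 0:
            break             # no foreground pixels left
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant factor dropped).
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

A binary ONH candidate mask would then be obtained as `[v > t for v in pixels]`, where `t` is the returned threshold.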

The area of RNFL feature extraction is divided into four sectors on the outside of the ONH. To produce sectors with similar size, an ROI image update is needed, in which the position of the ONH is updated to the center of the ROI image as shown in Figure 3C. This updating process is based on the center of the ONH area derived from the result of ONH segmentation. The last step in this process is to remove the area of the ONH since the RNFL is outside of ONH and then to update the ROI image, whose size is twice as large as the previous ROI image (Figure 3D). This size is selected because of its ability to distinguish the presence or absence of the RNFL in retinal fundus images.
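The re-centering of the ROI on the segmented ONH can be illustrated with a small sketch. The text does not state what happens when the enlarged window would cross the image border; shifting the window back inside the image is an assumption made here, and the function name `centered_roi` is hypothetical.

```python
def centered_roi(center, side, height, width):
    """Return (top, left, bottom, right) of a square window around center.

    center is (row, col) of the ONH center, side is the window size in pixels.
    The window is shifted, not shrunk, when it would leave the image
    (an assumption; the ONH is then no longer exactly centered).
    """
    cy, cx = center
    top = min(max(cy - side // 2, 0), max(height - side, 0))
    left = min(max(cx - side // 2, 0), max(width - side, 0))
    bottom = min(top + side, height)
    right = min(left + side, width)
    return top, left, bottom, right
```

Doubling the ROI size, as described above, would correspond to calling the function again with `side` set to twice the previous value and the updated ONH center.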

2) Feature extraction

The input of this process is an ROI image (Figure 3D). This process begins by converting the ROI image into a grayscale image. Subsequently, sub-sectors are formed based on a 30° angle at the center of the image in each sector. Therefore, the ROI image consists of 12 sub-sectors, each of roughly equal area, as seen in Figure 2B. Patches of 50 × 50 pixels are then formed in each sub-sector without overlapping, as shown in Figure 2C. Finally, the selected features (f1 and f2) are computed for each patch that does not contain background pixels.
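The partition into 30° sub-sectors and non-overlapping 50 × 50 patches can be sketched as below. This is a simplified illustration under stated assumptions: the ROI is tiled as a whole and each patch is assigned to an angular bin by its midpoint, angles are measured counter-clockwise from the positive x-axis, and the mapping from angular bins 0 to 11 onto the sub-sector numbers 01 to 12 is left out because it depends on the eye and is not specified here. The function names are hypothetical.

```python
from math import atan2, degrees


def angular_bin(center, point, step=30):
    """Index (0..11 for step=30) of the angular bin containing point.

    center and point are (row, col). Image rows grow downward, so the row
    difference is flipped to obtain a conventional counter-clockwise angle.
    """
    cy, cx = center
    y, x = point
    angle = degrees(atan2(cy - y, x - cx)) % 360
    return int(angle // step)


def patch_origins(height, width, size=50):
    """Top-left corners of non-overlapping size x size patches."""
    return [(r, c)
            for r in range(0, height - size + 1, size)
            for c in range(0, width - size + 1, size)]


def assign_patches(height, width, center, size=50):
    """Group patch origins by the angular bin of each patch midpoint."""
    bins = {}
    for r, c in patch_origins(height, width, size):
        mid = (r + size / 2, c + size / 2)
        bins.setdefault(angular_bin(center, mid), []).append((r, c))
    return bins
```

In a fuller version, patches overlapping the removed ONH area or the background would be filtered out before feature extraction, as the text requires.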

3) Classification

We apply three classifiers, namely, k-nearest neighbor (k-NN), support vector machine (SVM), and BPNN, to find the classifier with the best accuracy and to justify our proposed method. These classifiers are applied to detect the RNFL in all patches. In this work, BPNN obtains higher accuracy than the other classifiers. Classification is followed by discarding the isolated patches based on the neighbors in eight directions because the RNFL is a collection of brightly colored striations; therefore, if a sub-sector contains the RNFL, it should be detected in several interconnected patches. After this step, the conclusion of the RNFL detection process in each sub-sector can be drawn. In this work, we prefer to use RGB images, as in [22], rather than red-free images because the classification results show that mild RNFL loss tends to be undetectable in red-free images. Classification results obtained using a red-free image and an RGB image in a sub-sector are presented in Figure 4A and 4B, respectively.
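The removal of isolated patches can be sketched as follows. The sketch assumes that the per-patch classifier outputs of one sub-sector are arranged in a rectangular grid of 0 and 1 values (with 0 also standing for positions that hold no valid patch), and that a sub-sector is reported as containing the RNFL if at least one positive patch survives; both are illustrative assumptions, as are the function names.

```python
def remove_isolated(grid):
    """Set to 0 every positive patch that has no positive 8-neighbor.

    grid is a 2-D list of 0/1 patch labels (1 = RNFL detected).
    A new grid is returned; the input is left unchanged.
    """
    rows, cols = len(grid), len(grid[0])
    cleaned = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 1:
                continue
            has_neighbor = any(
                grid[r + dr][c + dc] == 1
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
                and 0 <= r + dr < rows
                and 0 <= c + dc < cols
            )
            cleaned[r][c] = 1 if has_neighbor else 0
    return cleaned


def subsector_has_rnfl(grid):
    """True if any positive patch remains after removing isolated ones."""
    return any(any(row) for row in remove_isolated(grid))
```

For example, a grid with two diagonally adjacent positives and one lone positive keeps the pair and drops the lone patch, so the sub-sector is still reported as containing the RNFL.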

Examples of classification results on each patch in a sub-sector with red-free (A) and RGB (B) images.

Figure 5 compares RNFL detection results obtained using three different classifiers in several sub-sectors: BPNN, SVM, and k-NN. The results of BPNN, SVM, and k-NN are shown in Figure 5A, 5B, and 5C, respectively. The images in the first and second rows were identified as images with the RNFL by an ophthalmologist. In the first row, all methods successfully detected the presence of the RNFL, although with different numbers of patches identified as areas with the RNFL. Based on the number of patches in which the presence of the RNFL was successfully detected, BPNN was more successful than the other methods. As seen in the second row, BPNN and SVM successfully detected the presence of the RNFL in this sub-sector, whereas k-NN failed to detect it because this method yielded only isolated patches. The last row shows an example of a sub-sector with no RNFL. BPNN and SVM obtained detection results that matched the ground truth. Although SVM yielded several patches with the RNFL, all of them were isolated. Meanwhile, misclassification occurred in this case using k-NN due to two patches where it erroneously indicated the presence of the RNFL.

Comparison of retinal nerve fiber layer detection result obtained using three different classifiers: (A) backpropagation neural network, (B) support vector machine, and (C) k-nearest neighbor.

Classification is applied first to sector I, followed by sectors S, N, and T. This order is based on the fact that the presence of the RNFL is most clearly visible in sectors I and S, while RNFL detection is applied to sector T last because RNFL loss begins in this sector. Each sector is divided into three sub-sectors; however, RNFL detection does not have to be performed in all sub-sectors. For example, in sector I, if sub-sector 01 is detected as an area with no RNFL, then we must proceed to sub-sector 02. However, if sub-sector 01 is detected as an RNFL area, then RNFL detection in this sector is terminated and detection continues with the next sector, namely S. This implies that the processes of forming patches and classification for sub-sectors 02 and 03 in sector I are no longer necessary; therefore, the computation time for RNFL detection can be reduced. However, to evaluate the performance of the proposed method, RNFL detection is carried out on all sub-sectors in sectors I, S, N, and T, sequentially.
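The sector order and early termination described above can be sketched as a short loop. The callable `classify_subsector` is a hypothetical stand-in for the whole chain of patch forming, classification, and isolated-patch removal for one sub-sector; the `exhaustive` flag corresponds to the evaluation setting in which all 12 sub-sectors are processed.

```python
# Sub-sector numbers per sector, in the processing order I, S, N, T.
SECTORS = {"I": [1, 2, 3], "S": [4, 5, 6], "N": [7, 8, 9], "T": [10, 11, 12]}


def detect_by_sector(classify_subsector, exhaustive=False):
    """Run RNFL detection sector by sector.

    classify_subsector(n) must return True if sub-sector n contains the RNFL.
    Unless exhaustive is True, the remaining sub-sectors of a sector are
    skipped as soon as one sub-sector is detected as an RNFL area.
    Returns {sector: {sub_sector: bool}} for the sub-sectors actually visited.
    """
    results = {}
    for sector in ("I", "S", "N", "T"):
        results[sector] = {}
        for sub in SECTORS[sector]:
            found = classify_subsector(sub)
            results[sector][sub] = found
            if found and not exhaustive:
                break  # RNFL present in this sector; move to the next one
    return results
```

With early termination, a sector costs between one and three sub-sector classifications, so the saving is largest when the first sub-sector of each sector already shows the RNFL.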

III. Results

The performance of the proposed automated RNFL detection method was evaluated using a total of 40 retinal fundus images provided in JPEG format and obtained from glaucoma patients. The images were acquired using two types of different tools. First, 22 images were acquired by a 45° field of view (FOV) Topcon TRC-NW8 fundus camera and digitized at 4288 × 2848 pixels. Second, 18 images were acquired by a 30° FOV Carl Zeiss AG fundus camera incorporated into a Nikon N150 digital camera and digitized at 2240 × 1488 pixels. All the retinal fundus images were collected from Dr. Sardjito Hospital and Dr. YAP Eye Hospital, Yogyakarta with the approval of the Ethics Committee (No. IRB KE/FK/1108/EC/2015).

An ophthalmologist with 21 years of experience evaluated all of the retinal fundus images to provide the ground truth. The ground truth of each retinal fundus image was derived from 12 areas (sub-sectors), 3 for each sector, with an angle of 30° each. Therefore, the 40 images used in this research yielded 480 sub-sectors as the ground truth. A label of ‘1’ or ‘0’ was given to each sub-sector, where ‘1’ indicated a sub-sector with RNFL, and ‘0’ indicated a sub-sector without RNFL (RNFL loss). The idea of forming 12 sub-sectors was adopted from the division of the area of OCT images based on ‘clock-hours’. This partitioning made the RNFL detection process by the expert more convenient and more detailed because the detection area becomes more focused. The RNFL detection of 12 sub-sectors needs to be considered because the occurrence of RNFL loss in almost all sub-sectors (especially in sectors I and S) indicates that glaucoma tends to be severe. The partition of sectors into sub-sectors and the labels provided by the expert for all the sub-sectors as the ground truth are shown in Figure 6. The order of the 12 sub-sectors is as follows: sub-sectors 01 to 03 are in sector I, 04 to 06 are in sector S, 07 to 09 are in sector N, and 10 to 12 are in sector T.

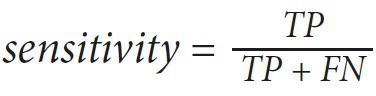

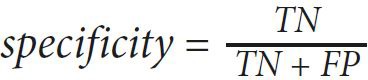

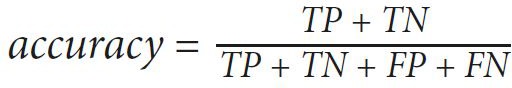

The values of sensitivity, specificity, and accuracy are used to express the performance of our proposed method. Those values are computed as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{1}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{2}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

where true positive (TP) is the number of images detected as RNFL by the expert and the proposed method, true negative (TN) is the number of images detected as RNFL loss by the expert and the proposed method, false positive (FP) is the number of images detected as RNFL loss by the expert but detected as RNFL by the proposed method, and false negative (FN) is the number of images detected as RNFL by the expert but detected as RNFL loss by the proposed method. Those values lie between 0 and 1, and an accurate method should have values close to 1.
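As a worked illustration of these measures, the sketch below counts TP, TN, FP, and FN from two lists of labels (‘1’ for RNFL, ‘0’ for RNFL loss) and derives the three values from them. The function names and the toy labels in the usage note are illustrative only and are not data from this study.

```python
def confusion_counts(truth, predicted):
    """Return (tp, tn, fp, fn) for binary labels, 1 = RNFL, 0 = RNFL loss."""
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def _ratio(numerator, denominator):
    # Undefined when a class is absent; report NaN instead of raising.
    return numerator / denominator if denominator else float("nan")


def performance(truth, predicted):
    """Sensitivity, specificity, and accuracy as values between 0 and 1."""
    tp, tn, fp, fn = confusion_counts(truth, predicted)
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
    }
```

For the toy input `truth = [1, 1, 1, 0, 0, 1]` and `predicted = [1, 0, 1, 0, 1, 1]`, the counts are TP = 3, TN = 1, FP = 1, and FN = 1, giving a sensitivity of 0.75 and a specificity of 0.5.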

Examples of RNFL detection results for two fundus images, as determined by the ophthalmologist as ground truth (GT) and by the proposed method (PM) in sub-sectors 01 to 12, are shown in Figure 7. Examples of false negative and false positive detections are shown in sub-sector 07 (Figure 7A) and sub-sector 11 (Figure 7B), respectively. The false negative in Figure 7A occurred in sub-sector 07 because it is the boundary area between sectors N and S, where the RNFL texture tends to be indistinct. The false positive in Figure 7B occurred in sub-sector 11 because the texture in that area is similar to mild RNFL.

Examples of erroneous retinal nerve fiber layer detection results obtained by the proposed method: (A) false negative and (B) false positive. GT: ground truth, PM: proposed method.

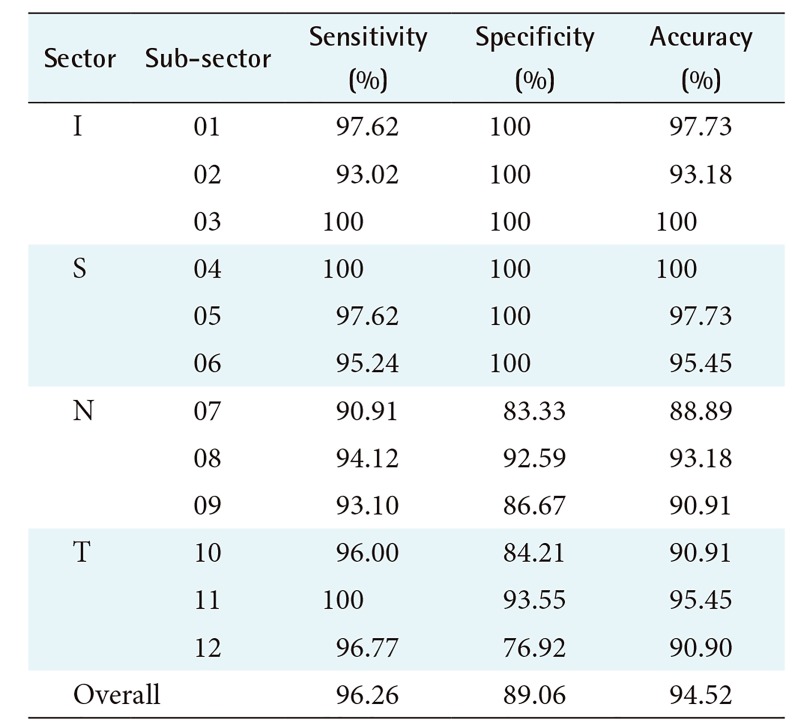

The performance of the proposed method, expressed by the values of sensitivity, specificity, and accuracy, is reported in Table 1. Based on Table 1, the sub-sectors of sectors I and S show the highest accuracy of 100%, followed by sectors T and N with 95.45% and 93.18%, respectively. This matches our observation based on the structure of the RNFL, namely that the RNFL is thickest in sectors I and S and therefore has a clearly apparent texture there. In sectors T and N, the sub-sectors mostly have the texture of mild RNFL, especially in the sub-sectors that are adjacent to sectors I and S; therefore, accuracy decreases in both sectors.

The lowest value of accuracy in sectors N and T occurs in sub-sectors that are adjacent to the neighboring sectors of I or S. In this case, low accuracy occurs in sub-sectors 07 and 12 due to the gradual depletion of RNFL structures occurring in these sub-sectors. Sector T, especially in sub-sector 11, shows a high value of accuracy because total RNFL loss often occurs there. The result of RNFL detection is influenced by the RNFL structure in each sector as well as the accurate selection of RNFL or RNFL loss areas to form the sample images used for training data.

The performance of our proposed method using BPNN and other classifiers (k-NN and SVM) is reported in Table 2. Based on Table 2, BPNN achieves the best performance among the three classifiers, with 94.52% accuracy, 95.44% sensitivity, and 82.91% specificity. This result indicates that our proposed method, which uses two selected features with BPNN followed by discarding isolated patches, can be considered a contribution to RNFL detection because of its high accuracy.

Comparison of classification results based on the proposed features obtained using various classifiers

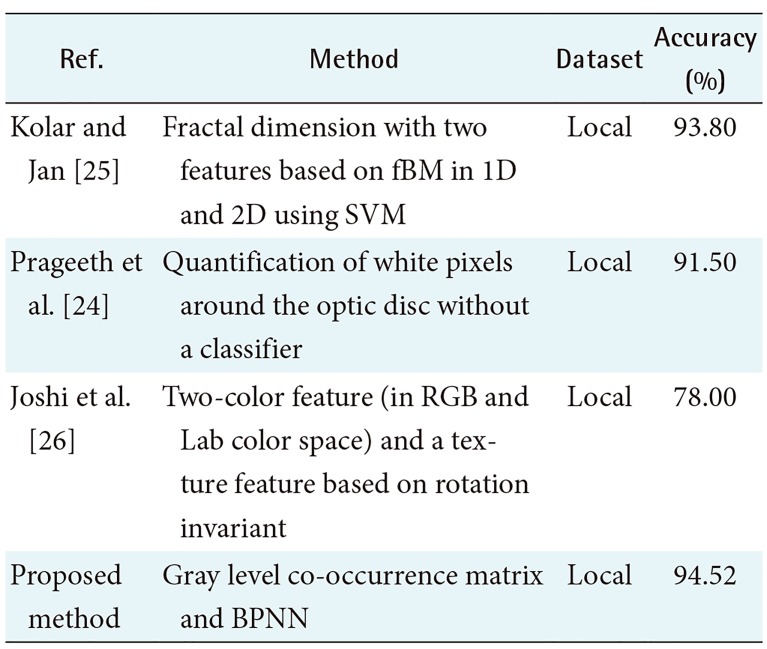

Furthermore, we summarized several methods for extracting RNFL features from fundus images to give insight into the development of feature extraction for RNFL detection; the summary is reported in Table 3. Table 3 shows that there have been only a few studies related to this issue, and all of them used their own local datasets to evaluate their methods. The detection of RNFL by the proposed method achieves 94.52% accuracy. This surpasses the results of several previous works [21,22,23], which achieved 93.8%, 78%, and 91.5% accuracy, respectively. Moreover, the approach proposed in [22] restricts the extraction area by discarding the area of the blood vessels, and its sample images are obtained manually. This differs from our research, in which we consider the blood vessel area a potential pattern that is easily identifiable because the RNFL overlaps some parts of the blood vessels. The quantification of white pixels in [21] and the rotation-invariant local binary pattern in [23] have limitations for implementation in sectors N and T. In those sectors, the texture of the RNFL tends to be too indistinct; therefore, the RNFL may be detected as the background. This issue can be overcome using GLCM features, by taking several sample images from all sub-sectors, along with BPNN as a classifier; these methods have been widely used to solve many cases of medical image classification.

IV. Discussion

An automated RNFL detection method by texture-based analysis has been proposed. Forty texture features are extracted using GLCM in RGB space. Those features are energy, contrast, correlation, homogeneity, entropy, autocorrelation, dissimilarity, cluster shade, cluster prominence, and maximum probability, each extracted in four directions: 0°, 45°, 90°, and 135°. Subsequently, feature selection by the CFS method is conducted to reduce the dimensions of the features. The feature selection step produces only two features, namely, autocorrelation 0° and correlation 90°. Then, we apply the BPNN method to classify the sub-sector based on the selected features. Our proposed method can distinguish which sub-sectors of a fundus image contain the RNFL and which show RNFL loss. The performance of our proposed method was evaluated using several retinal fundus images. A comparison with several previous methods, summarized in Table 3, shows that our method achieved a relatively high accuracy of 94.52%, which surpasses the performance of previous methods [21,22,23]. Moreover, our method uses only two features, a smaller feature set than those of the other methods. The results demonstrate that our method successfully detects the part of the RNFL that overlaps the blood vessels. Nevertheless, misclassification of the RNFL often occurs in sub-sectors 07, 09, 10, and 12 because our method is unable to handle the gradual depletion of RNFL structures occurring in these sub-sectors.

Several works related to medical image classification have been conducted using deep learning, including [29] and [30], which used fundus images for different purposes. Deep learning has been used to detect red lesions in diabetic retinopathy evaluation and achieved an AUC of 0.93 using the MESSIDOR dataset in [29]. It has also been used to detect glaucoma with an accuracy of 98.13% using 1,426 images in [30]. For future work, RNFL detection using the deep learning approach would be interesting to study since this method has shown great potential for extracting features and learning patterns from a large number of images.

In conclusion, we proposed a method of automated RNFL detection by texture-based analysis of retinal fundus images using only two features (autocorrelation 0° and correlation 90°) and the BPNN classifier. The evaluation of our method showed that it achieved an accuracy of 94.52%. This result indicates that the two proposed texture features are suitable for RNFL detection. Our proposed method may support ophthalmologists in diagnosing glaucoma from retinal fundus images based on RNFL loss, which indicates that the disease is becoming severe. It can also contribute to medical imaging analysis for glaucoma evaluation.

Acknowledgments

This research was funded by RISTEKDIKTI Indonesia (Grant No. 405/UN17.41/KL/2017). The authors would like to thank Dr. Sardjito Hospital and Dr. YAP Eye Hospital in Yogyakarta, Indonesia for providing the retinal fundus images as well as clinical data to test our proposed method.

Notes

Conflict of Interest: No potential conflict of interest relevant to this article was reported.