Video Archiving and Communication System (VACS): A Progressive Approach, Design, Implementation, and Benefits for Surgical Videos


Abstract

Objectives

As endoscopic, laparoscopic, and robotic surgical procedures become more common, surgical videos are increasingly being treated as records and serving as important data sources for education, research, and developing new solutions with recent advances in artificial intelligence (AI). However, most hospitals do not have a system that can store and manage such videos systematically. This study aimed to develop a system to help doctors manage surgical videos and turn them into content and data.

Methods

We developed a video archiving and communication system (VACS) to systematically process surgical videos. The VACS consists of a video capture device called SurgBox and a video archiving system called SurgStory. SurgBox automatically transfers surgical videos recorded in the operating room to SurgStory. SurgStory then analyzes the surgical videos and indexes important sections or video frames to provide AI reports. It allows doctors to annotate classified indexing frames, “data-ize” surgical information, create educational content, and communicate with team members.

Results

The VACS collects surgical and procedural videos, and helps users manage archived videos. The accuracy of a convolutional neural network learning model trained to detect the top five surgical instruments reached 96%.

Conclusions

With the advent of the VACS, the informational value of medical videos has increased. It is possible to improve the efficiency of doctors’ continuing education by making video-based online learning more active and supporting research using data from medical videos. The VACS is expected to promote the development of new AI-based products and services in surgical and procedural fields.

I. Introduction

As endoscopic, laparoscopic, and robotic surgical procedures become more common, surgical videos are increasingly being treated as records [1,2]. These surgical and procedural videos have become important sources of data for education, academic research [3], and developing new solutions with recent advances in artificial intelligence (AI) technology. However, most hospitals do not have a system to store and manage such videos systematically. After surgery, videos are often copied from the surgical device onto a USB drive and then stored on a PC or an external hard disk. This makes it difficult for doctors to search the videos for data and to create content from them, even though surgical videos contain various aspects of surgical knowledge and are highly valuable for research purposes. In this paper, we introduce a video archiving and communication system (VACS), which was developed through a research collaboration between MTEG Co. Ltd. and Gachon Medical Device R&D Center, as an effective solution for collecting, managing, and utilizing surgical videos.

II. Methods

The VACS (Figure 1) consists of two components: a video capture device called SurgBox, and a video archiving system called SurgStory. SurgBox captures video from the operating room and transfers it to a server. SurgStory has two systems: a cloud-based system, and an on-premise server-based system. The on-premise server-based system utilizes physical servers in the hospital. SurgStory transcodes and stores videos in a structure capable of streaming. This work focuses on the management and utilization of surgical videos.

Figure 1. Architecture of the video archiving and communication system. HTTP: Hypertext Transfer Protocol, FTP: File Transfer Protocol.

1. Video Capture Device

SurgBox (Figure 2) is connected to a surgical device in the operating room. After surgery, it automatically captures and transfers surgical videos to the cloud or on-premise server. The hardware specifications of SurgBox are listed in Table 1.

2. Video Archiving System

SurgStory is a collective term for the archiving system and functions that help doctors create content and research data using the VACS. As seen in Figure 1, it consists of two parts: a cloud-based (surgstory.com) server and an on-premise server.

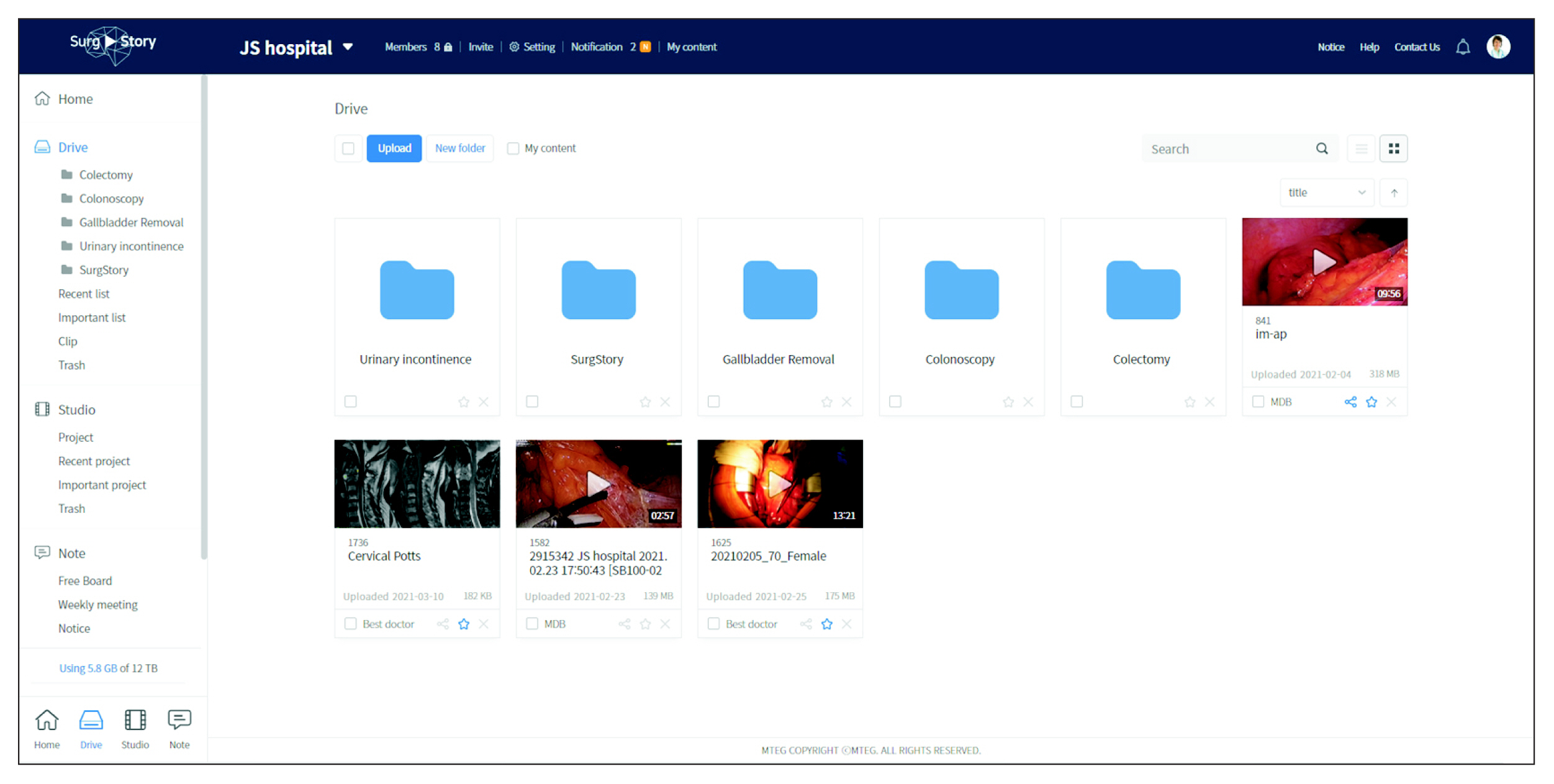

As shown in Figure 3, the video manager of SurgStory manages surgical videos. A user can input and change information (e.g., the type of surgery, surgery date, and the patient’s sex, age, and diagnosis) and upload attachments. Users can create, share, copy, move, and delete video files and folders, and extract important sections and scenes from surgical videos and annotate or mark them. SurgStory provides a keyword search function that allows users to search for words, phrases, or sets of words written as annotations, thereby reducing the time it takes to find important sections and scenes, and share them with other users. The hardware specifications of the on-premise server of SurgStory are listed in Table 2.
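
The keyword search over annotations described above can be illustrated with a minimal sketch. The `Annotation` record, its field names, and the matching rule (every query word must appear in the annotation text, case-insensitive) are assumptions made for illustration; the paper does not describe how SurgStory stores or matches annotations.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    # Hypothetical record layout; not taken from SurgStory.
    video_id: str
    start_sec: float
    end_sec: float
    text: str


def search_annotations(annotations, query):
    """Return annotations whose text contains every word of the query."""
    terms = query.lower().split()
    if not terms:
        return []
    return [a for a in annotations if all(t in a.text.lower() for t in terms)]


# Illustrative data only.
annotations = [
    Annotation("v001", 120.0, 185.5, "Bleeding near splenic artery"),
    Annotation("v001", 900.0, 960.0, "Stapler firing, anastomosis"),
    Annotation("v002", 42.0, 70.0, "Minor bleeding controlled with bipolar forceps"),
]

hits = search_annotations(annotations, "bleeding forceps")
for a in hits:
    print(a.video_id, a.start_sec, a.end_sec, a.text)
```

Because each hit carries a video identifier and a time range, a search result can point directly at the annotated section rather than at the whole video.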

3. Applications

The functions in SurgStory include team communication, web-based video editing, and AI reports. We are continuously developing and applying these functions depending on the requirements of doctors and hospitals.

1) Team communication

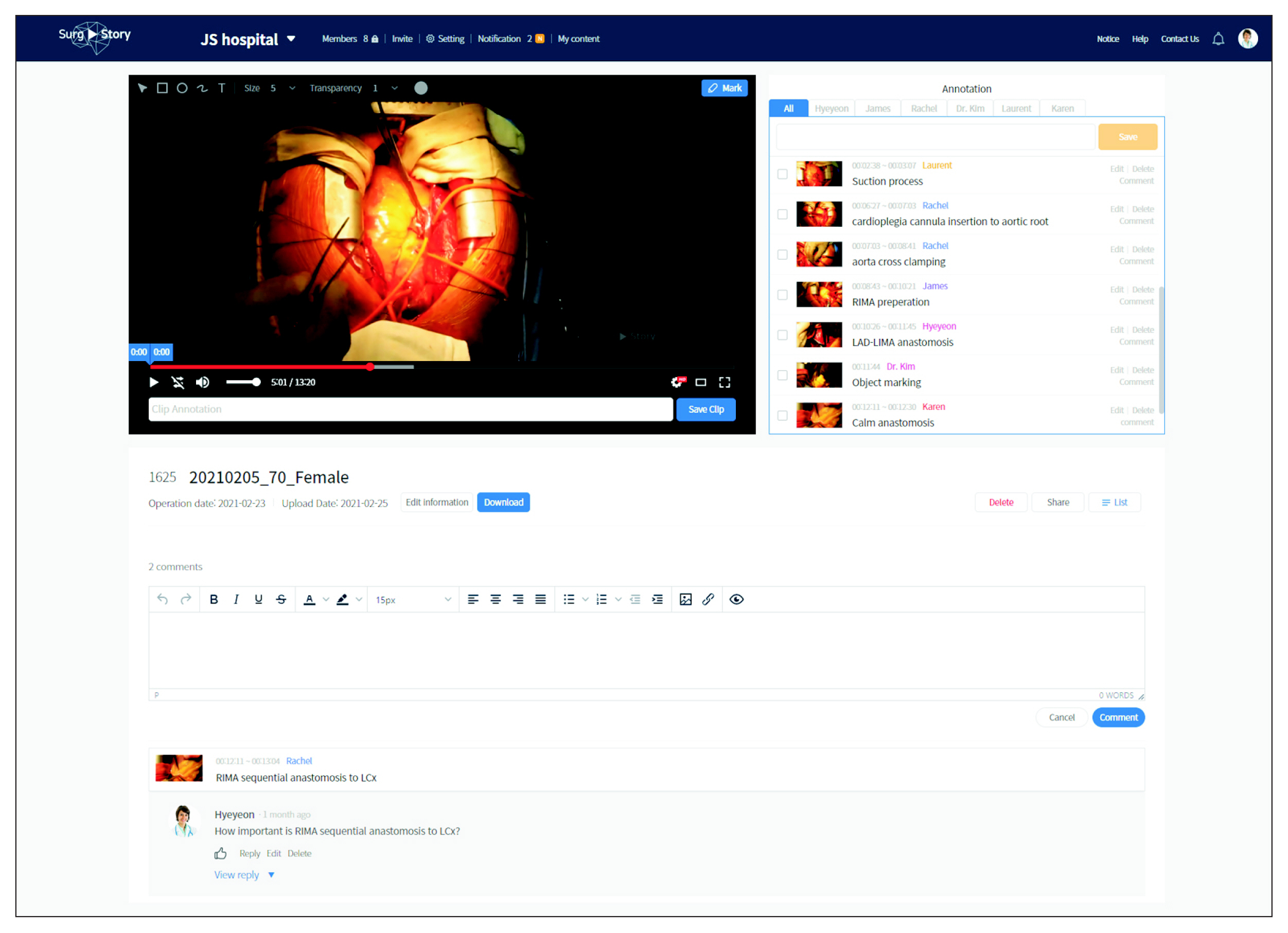

Users can create a team and invite other users as team members. They can upload surgical videos to the team storage space. They can view team members’ annotations and markings, write comments, and exchange questions and answers inside SurgStory (Figure 4).

2) Web-based video editor

SurgStory includes a web-based video editor. Users can edit videos and insert slides, pictures, and text. They can search for annotated sections using the keywords they used in the annotations, and add those sections to the timeline of a new video being edited (Figure 5). Edited videos can be made available for academic conferences, meetings, and education.

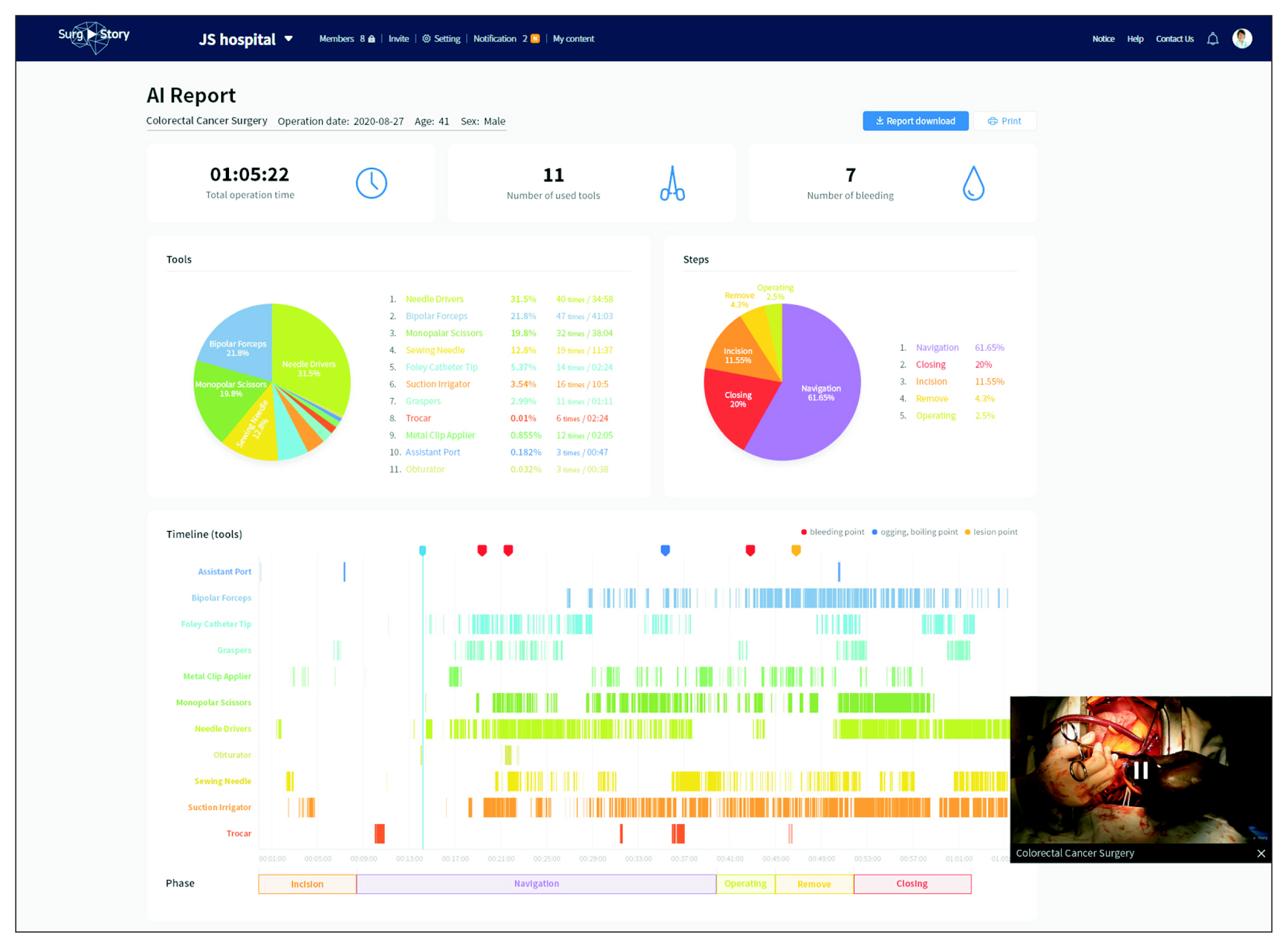

3) AI reports

Users can receive AI reports about their surgical videos. We developed a convolutional neural network (CNN)-based object detection model. We used videos of five types of surgical procedures to train the model: gastrectomy, colectomy, myomectomy, prostatectomy, and appendectomy. The CNN model was trained to automatically detect surgical instruments, lesions, organs, bleeding, and events in the surgical video. When users want to analyze a surgical video, they can request an analysis by clicking a detection button in SurgStory. A server with the AI model analyzes the video and creates a log file. SurgStory visualizes the log file and provides it to the user as an AI report (Figure 6). The AI report shows the frequency and proportion of use of surgical instruments. The timeline of the report includes the use of each instrument and points where bleeding, lesions, or fogging were detected. Users can download and print AI reports. AI reports can be made available for publishing academic papers and case studies.
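
As an illustration of how a detection log could be turned into the frequency and proportion figures shown in an AI report, consider the sketch below. The log layout (one `(frame_index, label)` row per detection) and the function name `instrument_usage` are assumptions; the paper does not specify the format of the log file produced by the analysis server.

```python
from collections import Counter

# Hypothetical log: one (frame_index, label) row per detection.
log = [
    (0, "monopolar scissors"),
    (0, "bipolar forceps"),
    (1, "monopolar scissors"),
    (2, "monopolar scissors"),
    (2, "suction irrigator"),
    (3, "bipolar forceps"),
]


def instrument_usage(log):
    """Count detections per label and each label's share of all detections."""
    counts = Counter(label for _, label in log)
    total = sum(counts.values())
    # most_common() orders labels from most to least frequently detected.
    return {label: (n, n / total) for label, n in counts.most_common()}


report = instrument_usage(log)
for label, (n, share) in report.items():
    print(f"{label}: {n} detections ({share:.0%})")
```

Counting detections is only one possible definition of "use"; a report could equally count frames or seconds in which an instrument is visible.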

III. Results

We designed a VACS that consists of two parts: a video capture device (SurgBox) and a video archiving system (SurgStory). The VACS collects surgical and procedural videos and provides the following functions to apply to surgical videos: archiving system, team communication, video editing, and AI reports. We trained the AI on 52 instruments and over 180,000 object counts. The AI divides a surgical video into incision, operation, and closing sections. It can detect the top five surgical tools with an accuracy of over 96%: robotic monopolar scissors, robotic bipolar forceps, robotic graspers, suction irrigators, and LigaSure (Medtronic, Minneapolis, MN, USA). It can detect other objects with an accuracy of 50%, including retained food, retained saliva, esophagus white lesion, and a dilated esophagus. The difference in accuracy between surgical tools and other objects is caused by the imbalance in the amount of data.
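
The link between data imbalance and accuracy can be made visible by reporting accuracy per class alongside the number of examples in each class. The sketch below is a generic illustration with made-up toy labels; the paper does not state how its accuracy figures were computed, and the per-class accuracy used here (correct predictions divided by true examples of that class) is an assumption.

```python
from collections import defaultdict


def per_class_accuracy(y_true, y_pred):
    """Return {label: (n_examples, accuracy)} grouped by the true label."""
    total = defaultdict(int)
    correct = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    return {label: (n, correct[label] / n) for label, n in total.items()}


# Toy data only: a well-represented class and a rare class.
y_true = ["scissors"] * 8 + ["lesion"] * 2
y_pred = ["scissors"] * 8 + ["lesion", "scissors"]

for label, (n, acc) in per_class_accuracy(y_true, y_pred).items():
    print(f"{label}: {n} examples, accuracy {acc:.0%}")
```

Listing the example count next to each accuracy value shows at a glance which classes are under-represented in the data.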

IV. Discussion

The VACS has been installed at several university hospitals such as Seoul National University Hospital, Ajou University Hospital, and Gachon University Gil Medical Center. Raphael International, a medical support corporation, uses SurgStory as a tool for Korean doctors to educate and communicate with doctors in Myanmar. To develop AI reporting solutions, we trained a CNN model to detect instruments, lesions, organs, bleeding, and events. Depending on the quality and quantity of videos, the detection accuracy can range from 55% to 96%. We need a continuous supply of high-quality surgical videos to train AI models. To improve the object detection accuracy, we are developing a mask region-based CNN model. As SurgStory is a video-based platform, privacy concerns may arise. We supply the system and can access the data only at the request of the hospital or a doctor. The surgical videos belong to the respective doctor and hospital, not to SurgStory. The information contained in the system is controlled and managed in compliance with the internal regulations of the hospital. The ultimate goal is to provide a service that automatically converts overall surgical information into time-series data (e.g., instruments, bleeding, lesions, and fogging), which can serve as an archive recording the doctor’s actions. The VACS is expected to become a regularly used tool for doctors who want to teach surgical techniques or improve their surgical skills.
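
One way to picture the conversion of detections into time-series data is to merge the frame indices at which an event was detected into time intervals. The sketch below assumes per-frame detections and a known frame rate; the function name `frames_to_intervals` and the `max_gap` parameter are hypothetical and are not taken from the VACS.

```python
def frames_to_intervals(frames, fps, max_gap=1):
    """Merge frame indices into (start_sec, end_sec) intervals.

    Frames more than max_gap apart start a new interval. The end time is
    the end of the last frame in the interval, hence (prev + 1) / fps.
    """
    intervals = []
    start = prev = None
    for f in sorted(frames):
        if start is None:
            start = prev = f
        elif f - prev <= max_gap:
            prev = f
        else:
            intervals.append((start / fps, (prev + 1) / fps))
            start = prev = f
    if start is not None:
        intervals.append((start / fps, (prev + 1) / fps))
    return intervals


# Illustrative data: frames in which bleeding was detected, at 10 frames/s.
bleeding_frames = [10, 11, 12, 40, 41]
print(frames_to_intervals(bleeding_frames, fps=10))
```

Raising `max_gap` tolerates short detection dropouts, so that a brief missed frame does not split one event into two intervals.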

Notes

Conflict of interest

Kwang Gi Kim is an editorial member of Healthcare Informatics Research; however, he was not involved in the peer reviewer selection, evaluation, or decision process of this article. Otherwise, no potential conflict of interest relevant to this article was reported.

Acknowledgments

The authors thank MTEG staff members, especially Jisook Kim and Janghyun Nam, for their efforts in developing the VACS. This research was supported by Gachon University (No. 2018-0669) and by a grant from the Institute for Information & communications Technology Promotion (IITP) and the National IT Industry Promotion Agency (NIPA), funded by the Korea government (MSIT) (No. A0602-19-1032, Intelligent surgical guide system & service from surgery video data analytics).